A Beginner's Guide: Things to Learn in AI, ML, and Large Language Models

- aimlfastrace

- Dec 1, 2024

- 11 min read

Updated: Dec 16, 2024

Introduction: A New Era of Learning

If you're interested in learning about Artificial Intelligence (AI), Machine Learning (ML), or Large Language Models (LLMs) from scratch to mastery, you're in the right place.

A summary of this blog is available in this YouTube video: https://youtu.be/ks--VwVT9g4

Today, I will explain in detail the topics you need to learn to become an expert in AI. These topics are crucial for implementing end-to-end AI applications:

Machine Learning

Deep Learning

Large Language Models

MLOps

1. What Exactly is AI and ML?

Artificial Intelligence (AI) is the field of building machines or computers that can think and make decisions in ways that resemble human intelligence. Machines mimic human intelligence by learning from data, recognizing patterns, and making decisions based on that information.

Some applications of AI are:

Transportation

Autonomous Vehicles: Self-driving cars and trucks use AI to navigate, avoid obstacles, and make driving decisions, aiming to reduce accidents and improve traffic flow.

Healthcare

Robotic Surgery: AI-powered robotic systems assist surgeons by providing enhanced precision, control, and visualization during complex procedures.

Retail

Personalized Shopping Experiences: AI-driven recommendation engines suggest products based on customer behavior, preferences, and purchase history.

Finance

Fraud Detection: AI systems can analyze transaction patterns in real time to identify and prevent fraudulent activities.

Machine Learning (ML) is a subset of AI where computers learn from data without being explicitly programmed for every task. Think of it as teaching a computer to recognize patterns and make decisions based on examples.

Real-Life Examples:

Customer Sentiment Analysis: Analyzing customer reviews and feedback to understand sentiment and improve products/services.

Disease Prediction: AI models predict the likelihood of patients developing conditions such as diabetes, heart disease, or Alzheimer's based on their medical history, genetic information, and lifestyle data.

Chatbots and Virtual Assistants: AI-powered chatbots provide 24/7 customer support, answering queries. Virtual assistants, on the other hand, are more advanced and can perform a wider range of tasks beyond just chatting, like controlling smart home devices or setting reminders.

2. Language and Frameworks

Python is among the leading languages for Machine Learning due to its extensive libraries, vibrant community support, and ease of learning. Below are some essential libraries and tools you should be familiar with:

NumPy

Pandas

Matplotlib/Seaborn

Scikit-learn

TensorFlow/PyTorch

3. Building Blocks of AI & ML You Need to Learn

The important topics you need to learn to understand Machine Learning are listed below:

Stats and Probability: Understanding Numbers

Before getting into the details of Machine Learning (ML), it's important to grasp some fundamental concepts from statistics and probability, such as averages, percentages, and probabilities. These concepts help us assess how likely events are and recognize patterns, which is essential for building and evaluating ML models.

Statistics is the study of data collection, analysis, interpretation and presentation. It helps us make sense of large amounts of data by summarizing it with measures like averages and percentages. For example, if we survey 100 people about their favorite ice cream flavor and find that 60 like chocolate, we can say that 60% of the surveyed group prefers chocolate.
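To make the survey example concrete, here is a minimal plain-Python sketch using the standard library's `collections.Counter` and `statistics.mean`. The 100 responses are made up to match the 60% chocolate figure; the vanilla and strawberry counts and the respondents' ages are hypothetical.

```python
from collections import Counter
from statistics import mean

# Hypothetical survey: 100 responses, 60 of which prefer chocolate
responses = ["chocolate"] * 60 + ["vanilla"] * 25 + ["strawberry"] * 15
counts = Counter(responses)
total = len(responses)

# Percentage: share of respondents choosing each flavor
for flavor, count in counts.most_common():
    print(f"{flavor}: {count / total:.0%}")  # chocolate: 60%, vanilla: 25%, strawberry: 15%

# Average: summarize a numeric column with a single number
ages = [23, 35, 31, 42, 29]  # hypothetical ages of five respondents
print("average age:", mean(ages))  # 32
```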

Probability is the study of how likely events are to occur. A probability is expressed as a number between 0 and 1, where 0 means the event will not happen and 1 means it definitely will. For instance, the probability that a fair coin lands on heads is 0.5, or 50%, since there are two equally likely outcomes.
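A quick way to build intuition for probability is to simulate it. This sketch uses Python's standard `random` module (the function name `estimate_heads_probability` is just illustrative) to flip a simulated fair coin many times and compare the observed fraction of heads with the exact value.

```python
import random

def estimate_heads_probability(num_flips: int, seed: int = 42) -> float:
    """Estimate P(heads) for a fair coin by simulating num_flips flips."""
    rng = random.Random(seed)  # seeded so the run is reproducible
    heads = sum(rng.random() < 0.5 for _ in range(num_flips))
    return heads / num_flips

exact = 1 / 2  # one favorable outcome (heads) out of two equally likely outcomes
for n in (10, 1_000, 100_000):
    print(n, estimate_heads_probability(n))
print("exact:", exact)
```

With only 10 flips the estimate can be far from 0.5; as the number of flips grows, it settles close to the exact probability.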

Together, statistics and probability help us understand and predict patterns and trends in data. For example, weather forecasts use past data and probability to predict the chance of rain.

Below are some resources for learning statistics and probability:

Khan Academy - Statistics and Probability

StatQuest Channel (Don't think it's just a silly song 😊)

Coursera - Statistics and Probability

Algorithms:

Machine learning algorithms are like recipes that teach computers how to learn from and make decisions based on data, without being explicitly programmed for specific tasks. These algorithms recognize patterns and derive insights from the data they are given, enabling them to predict outcomes or classify information.

Machine learning models can be broadly categorized as supervised learning (trained on labeled data) or unsupervised learning (trained on unlabeled data).

Supervised learning problems can be further classified as Regression and Classification problems.

Regression problems involve predicting a continuous numerical value based on input features, such as estimating the price of a house. The output is a continuous variable, and common algorithms include linear regression, polynomial regression, and neural networks.
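As an illustration of regression, here is a sketch of simple linear regression fitted by ordinary least squares in plain Python. The house sizes and prices are hypothetical values chosen to lie exactly on a line, so the result is easy to check; in practice you would use a library such as scikit-learn and real data.

```python
def fit_line(xs, ys):
    """Ordinary least squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Hypothetical data: house size (m²) -> price (in thousands)
sizes = [50, 70, 90, 110, 130]
prices = [150, 200, 250, 300, 350]
slope, intercept = fit_line(sizes, prices)
print(slope, intercept)          # 2.5 25.0
print(slope * 100 + intercept)   # predicted price for a 100 m² house: 275.0
```

The output is a continuous number, which is what makes this a regression problem rather than a classification one.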

Classification problems involve predicting a discrete label or category for given input data, such as determining whether an email is "spam" or "not spam." The output is categorical, and common algorithms include decision trees, support vector machines, and neural networks.
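Decision trees and support vector machines take more code to write by hand, so this sketch uses a simpler classifier, k-nearest neighbors (k-NN), to show the basic idea of predicting a discrete label. The two features per email (number of links and occurrences of the word "free") and all training examples are hypothetical.

```python
import math
from collections import Counter

def knn_predict(train, query, k=3):
    """Label `query` by majority vote among its k nearest training points."""
    by_distance = sorted(train, key=lambda item: math.dist(item[0], query))
    votes = Counter(label for _, label in by_distance[:k])
    return votes.most_common(1)[0][0]

# Hypothetical features per email: (number of links, times the word "free" appears)
train = [
    ((8, 5), "spam"), ((6, 4), "spam"), ((7, 6), "spam"),
    ((0, 0), "not spam"), ((1, 0), "not spam"), ((2, 1), "not spam"),
]
print(knn_predict(train, (7, 5)))  # spam
print(knn_predict(train, (1, 1)))  # not spam
```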

An unsupervised model is a type of machine learning algorithm that works with data that does not have labeled outputs. In other words, the algorithm is given input data without any corresponding "correct answers" or target values. The goal of unsupervised learning is to find hidden patterns or structures in the data on its own. Common types of unsupervised learning are clustering, dimensionality reduction, anomaly detection, and association rule learning.
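Clustering is the easiest unsupervised task to see in code. Below is a minimal k-means sketch in plain Python: it alternates between assigning each point to its nearest centroid and moving each centroid to the mean of its points. The data points and the starting centroids are hypothetical; libraries such as scikit-learn choose starting points more carefully.

```python
import math

def kmeans(points, centroids, iterations=10):
    """Plain k-means: assign points to the nearest centroid, then recompute centroids."""
    for _ in range(iterations):
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Move each centroid to the mean of its cluster (keep it in place if the cluster is empty)
        centroids = [
            tuple(sum(coord) / len(c) for coord in zip(*c)) if c else centroids[i]
            for i, c in enumerate(clusters)
        ]
    return centroids, clusters

# Hypothetical unlabeled data: two loose groups of customers (visits, spend)
points = [(1, 2), (2, 1), (1, 1), (8, 9), (9, 8), (9, 9)]
centroids, clusters = kmeans(points, centroids=[points[0], points[3]])
print(centroids)  # one centroid near (1.33, 1.33), the other near (8.67, 8.67)
```

No labels were given, yet the algorithm separates the two groups on its own.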

To begin, you can explore the machine learning models available in scikit-learn: https://scikit-learn.org/stable/

Data Science: Essential for AI and ML

To teach machines, we need a lot of data. Data science is the field that makes this possible. It involves collecting, cleaning, and preparing data so that machines can use it to understand patterns and make decisions. This process ensures that raw data becomes something machines can work with effectively.

Go through the data preprocessing methods available in scikit-learn and TensorFlow.
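As a small example of data preparation, this plain-Python sketch fills a missing value with the column mean and then rescales the column to the 0 to 1 range (min-max scaling). The `ages` column is hypothetical. In scikit-learn, the same two steps correspond to `SimpleImputer(strategy="mean")` and `MinMaxScaler`.

```python
def impute_mean(values):
    """Replace missing entries (None) with the mean of the observed values."""
    observed = [v for v in values if v is not None]
    fill = sum(observed) / len(observed)
    return [fill if v is None else v for v in values]

def min_max_scale(values):
    """Rescale values linearly to [0, 1]; assumes the values are not all equal."""
    lo, hi = min(values), max(values)
    return [(v - lo) / (hi - lo) for v in values]

# Hypothetical raw column with one missing reading
ages = [20, None, 40, 30, 50]
clean = impute_mean(ages)       # [20, 35.0, 40, 30, 50]
scaled = min_max_scale(clean)   # [0.0, 0.5, 0.666..., 0.333..., 1.0]
print(clean, scaled)
```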

4. Deep Learning

Neural networks have evolved significantly and are now central to many advanced AI applications. These models power technologies like self-driving cars, speech recognition, and image classification. Below are some of the best-known and most widely used types of neural networks and how they evolved.

Perceptron

The Perceptron is the simplest type of neural network and forms the basis for most of the models that followed. It is a binary classifier: it computes a weighted sum of its inputs, adds a bias, and outputs 1 if the result is above zero and 0 otherwise. While limited in its capabilities (it can only separate classes that a straight line, or hyperplane, can divide), the Perceptron was the first step in neural network development.
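The perceptron's learning rule is short enough to write out in full. This plain-Python sketch trains a single perceptron on the logical AND function; the learning rate and number of epochs are illustrative choices, not values from any particular source.

```python
def predict(weights, bias, x):
    """Step activation: output 1 if the weighted sum plus bias is positive, else 0."""
    return 1 if sum(w * xi for w, xi in zip(weights, x)) + bias > 0 else 0

def train_perceptron(data, epochs=10, lr=1.0):
    """Perceptron learning rule: nudge each weight by lr * error * input."""
    weights, bias = [0.0] * len(data[0][0]), 0.0
    for _ in range(epochs):
        for x, target in data:
            error = target - predict(weights, bias, x)
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
    return weights, bias

# Logical AND is linearly separable, so a single perceptron can learn it
and_data = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train_perceptron(and_data)
print([predict(weights, bias, x) for x, _ in and_data])  # [0, 0, 0, 1]
```

Because AND is linearly separable, the perceptron learning rule is guaranteed to converge. A single perceptron cannot learn XOR, which is one reason multi-layer networks were needed.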

Feedforward Neural Network (FNN)

A Feedforward Neural Network is a basic architecture in which information moves in one direction, from input to output. Each layer in the network is directly connected to the one that follows, and there are no loops or backward connections. This means that once data enters the network, it flows straight through the layers in a single direction, making it a simple and effective architecture for tasks like image classification or pattern recognition.
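To see information flowing forward through layers, here is a tiny two-layer feedforward network in plain Python that computes XOR, something a single perceptron cannot do. The weights are hand-picked for illustration rather than learned; in a real network they would be found by training (for example, with backpropagation).

```python
def step(z):
    return 1 if z > 0 else 0

def layer(inputs, weights, biases):
    """One fully connected layer: each neuron takes a weighted sum of all inputs."""
    return [step(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]

def xor_network(x):
    # Hidden layer: neuron 1 behaves like OR, neuron 2 like NAND (hand-picked weights)
    hidden = layer(x, weights=[[1, 1], [-1, -1]], biases=[-0.5, 1.5])
    # Output layer: AND of the two hidden neurons gives XOR
    return layer(hidden, weights=[[1, 1]], biases=[-1.5])[0]

for x in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(x, xor_network(x))  # 0, 1, 1, 0
```

Data only moves from the input, through the hidden layer, to the output; nothing loops back.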

Convolutional Neural Networks (CNNs)

Convolutional Neural Networks (CNNs) are specialized models used for analyzing images or videos. A CNN consists of convolutional layers that apply filters to the input data to capture spatial features, followed by pooling layers that reduce the size of the data. CNNs are highly effective in tasks such as image recognition, object detection, and facial recognition because they automatically learn a hierarchy of spatial features, from simple edges up to complex shapes.

Key Features:

Convolutional Layers: These layers focus on identifying features like lines, colors, or textures in the data. They work by sliding a small filter across the image to detect these features.

Pooling Layers: These layers reduce the size of the data, helping the model focus on the most important features and making the learning process more efficient.
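The sliding-filter and pooling steps can be sketched in plain Python. The 5x5 "image" and the 2x2 edge filter below are hypothetical; in a real CNN the filter values are learned during training. Like deep-learning libraries, this sketch does not flip the kernel, so strictly speaking it computes a cross-correlation, which is what CNN "convolution" layers usually do.

```python
def convolve2d(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and sum elementwise products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [sum(image[i + a][j + b] * kernel[a][b] for a in range(kh) for b in range(kw))
         for j in range(out_w)]
        for i in range(out_h)
    ]

def max_pool(feature_map, size=2):
    """Keep only the largest value in each non-overlapping size x size window."""
    return [
        [max(feature_map[i + a][j + b] for a in range(size) for b in range(size))
         for j in range(0, len(feature_map[0]) - size + 1, size)]
        for i in range(0, len(feature_map) - size + 1, size)
    ]

# Hypothetical 5x5 grayscale image: dark on the left, bright on the right
image = [[0, 0, 1, 1, 1] for _ in range(5)]
vertical_edge = [[-1, 1], [-1, 1]]            # responds where brightness rises left to right
features = convolve2d(image, vertical_edge)   # 4x4 map: each row is [0, 2, 0, 0]
pooled = max_pool(features)                   # 2x2 map: [[2, 0], [2, 0]]
print(features, pooled)
```

The filter lights up only at the dark-to-bright boundary, and pooling halves each dimension while keeping that strong response.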

Use Case:

CNNs are commonly used in tasks like recognizing objects in photos, sorting images into categories, or detecting faces in a crowd. CNNs are also used for text analysis, even though they are most commonly known for image processing.

Recurrent Neural Networks (RNNs)

Recurrent Neural Networks (RNNs) are designed for handling sequential data, such as time series, text, or speech. Unlike feedforward neural networks, where data flows only in one direction, RNNs have feedback loops that carry information from previous inputs forward. This structure lets them retain context, making them well suited to tasks where the order and past information matter.

Key Features:

Memory: RNNs can "remember" previous inputs in the sequence, which helps in tasks like predicting the next word in a sentence or forecasting future trends in data.

Sequential Processing: They are designed to process data where the order is important, such as time-series data or text sequences.
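The "memory" of an RNN is a hidden state that is updated at every step. This plain-Python sketch shows a single-unit RNN with scalar weights; the weight values are arbitrary and untrained, chosen only to show how earlier inputs keep influencing later states.

```python
import math

def rnn_forward(sequence, w_x=0.5, w_h=0.8, b=0.0):
    """Scalar RNN: h_t = tanh(w_x * x_t + w_h * h_{t-1} + b), starting from h_0 = 0."""
    h = 0.0
    states = []
    for x in sequence:
        h = math.tanh(w_x * x + w_h * h + b)  # new state mixes the current input with memory
        states.append(h)
    return states

# The same input values in a different order leave the network in a different final state
print(rnn_forward([1.0, 0.0, 0.0]))
print(rnn_forward([0.0, 0.0, 1.0]))
```

In the first run the 1.0 arrives first and its effect fades over the following steps; in the second it arrives last, so the final states differ even though the inputs are the same values.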

Use Cases:

Language Modeling: RNNs can predict the next word or character in a sequence, which is useful for text generation or auto-completion.

Time Series Prediction: RNNs can be used to forecast stock prices, weather patterns, or any data that is recorded over time.

Music Generation: RNNs can generate music by learning patterns in existing musical sequences.

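Other important architectures followed, including Long Short-Term Memory (LSTM) networks, an RNN variant that is better at remembering information over long sequences, as well as autoencoders, Generative Adversarial Networks (GANs), and Transformer networks.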

Transformer Networks

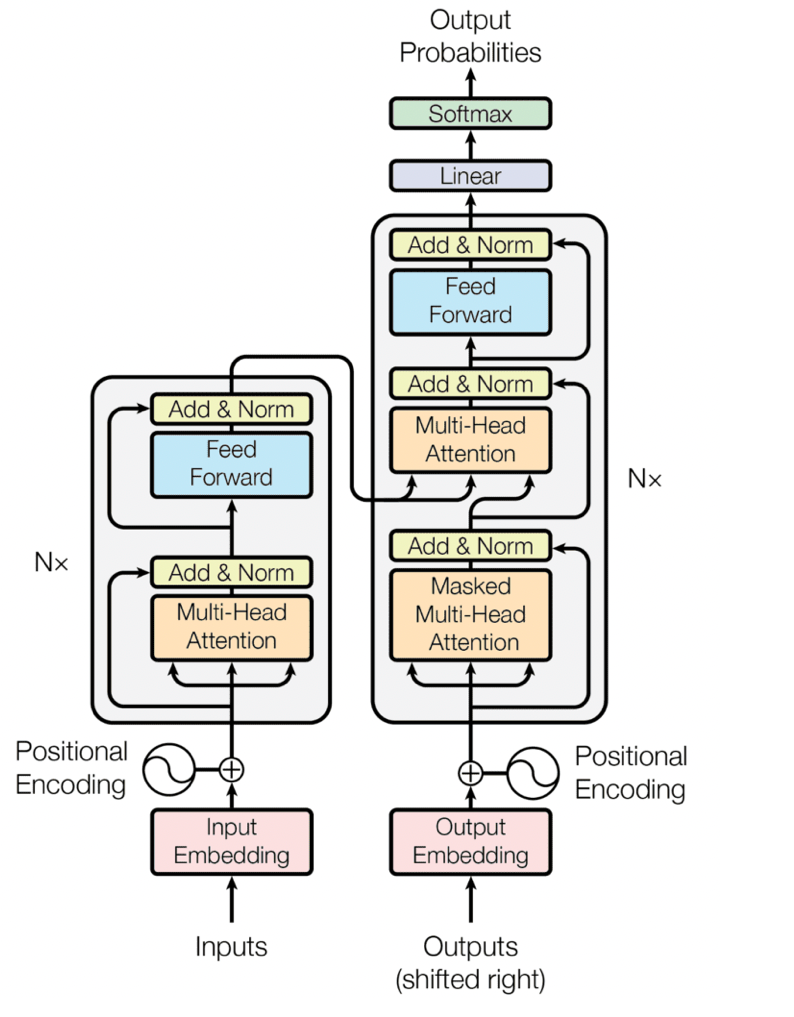

Transformer Networks are a type of neural network that changed how we handle tasks involving language. Unlike older models like RNNs and LSTMs, transformers can look at the entire text at once, making them much better at understanding complex language patterns. They use a technique called self-attention, which allows the model to focus on different words in a sentence and understand how they all relate to each other, no matter how far apart they are.

Key Features:

Self-Attention: This mechanism lets the model weigh every part of the input against every other part at once, rather than reading it word by word, which helps it capture context across the whole text.

Parallel Processing: Unlike older models, which process data one piece at a time, transformers can handle multiple pieces of data simultaneously.
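Self-attention is usually written as softmax(QKᵀ / √d)·V. The sketch below implements that formula directly in plain Python for a single attention head. The 3-token input vectors are hypothetical, and for simplicity the same vectors serve as queries, keys, and values; real transformers first multiply the input by learned weight matrices to produce Q, K, and V.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d)) V, one output row per token."""
    d = len(keys[0])
    outputs = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)  # how much this token attends to every token
        outputs.append([sum(w * v[j] for w, v in zip(weights, values))
                        for j in range(len(values[0]))])
    return outputs

# Hypothetical 2-dimensional vectors for a 3-token sentence (Q = K = V = x here)
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
for row in self_attention(x, x, x):
    print([round(v, 3) for v in row])
```

Every token's output is a weighted mix of all tokens' values, computed independently per token, which is why this step parallelizes well.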

Use Cases:

Text Generation: Writing new content, like generating news articles or completing sentences.

Question Answering: Understanding questions and providing accurate answers, like how Google or Siri understand and respond to questions.

Summarization: Automatically creating summaries of long articles or books.

5. Large Language Models (LLMs): The Power of Words

What Are LLMs?

Large Language Models (LLMs) are powerful deep learning systems trained on huge amounts of text using the transformer architecture. The original transformer is made up of two main parts, the encoder and the decoder, although many LLMs, such as the GPT models, use only the decoder. These components work together to understand the meaning of words and their relationships in a piece of text. A key feature of transformers is their self-attention mechanism, which helps them focus on important parts of the text to understand the context better.

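Transformers also learn well from unlabeled text. LLMs are pretrained in a self-supervised way, typically by predicting the next word (more precisely, the next token) in a sequence, so the text itself provides the targets and no human-written labels are needed. Over time, they pick up on grammar, language rules, and basic knowledge, enabling them to understand and generate text more effectively.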
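LLM pretraining needs no hand-written labels because the text supplies its own targets: each word is the "answer" for the words that come before it. This sketch turns a sentence into (context, next word) training pairs. Splitting on spaces and the 3-word context window are simplifications; real LLMs use subword tokenizers and much longer contexts.

```python
def next_token_pairs(text, context_size=3):
    """Turn raw text into (context, next word) training examples; no manual labels needed."""
    tokens = text.split()  # real LLMs use subword tokenizers, not whitespace splitting
    return [
        (tokens[max(0, i - context_size):i], tokens[i])
        for i in range(1, len(tokens))
    ]

for context, target in next_token_pairs("the cat sat on the mat"):
    print(context, "->", target)
# ['the'] -> cat
# ['the', 'cat'] -> sat
# ['the', 'cat', 'sat'] -> on
# ['cat', 'sat', 'on'] -> the
# ['sat', 'on', 'the'] -> mat
```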

Because transformers process tokens in parallel, they scale well to extremely large models with billions of parameters, which makes them well suited for tasks like language generation, translation, and understanding. This combination of speed, flexibility, and scale is what makes transformer-based LLMs so effective in various AI applications.

Popular LLMs:

GPT-4o (“o” for “omni”): OpenAI's most advanced GPT model at the time of writing. It is multimodal (accepting text or image inputs and outputting text) and matches the intelligence of GPT-4 Turbo while being much more efficient: it generates text 2x faster and is 50% cheaper. GPT-4o also had the best vision and non-English language performance of any OpenAI model at its release.

Gemini 1.5: Developed by Google DeepMind, Gemini 1.5 is a multimodal model that can understand text, images, audio, and video, and it is known for its very long context window. The first Gemini models were released in December 2023, and Gemini 1.5 followed in February 2024.

Llama 3: This model from Meta is growing in popularity because it’s flexible and easy to use. Its weights are openly available, so anyone can fine-tune it to fit their needs, which makes it great for creating specialized models.

Claude 2: Made by Anthropic, Claude 2 is designed with a strong focus on safety and reliability. It performs well on tasks that require understanding and reasoning.

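Gemma 2: Another model family from Google, Gemma 2 is a set of lightweight open models built from the same research as Gemini. Its smaller sizes make it practical to run on modest hardware, which helps in latency-sensitive, real-time applications.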

Falcon: This open-source model family was created by the UAE’s Technology Innovation Institute. It comes in several sizes and handles a wide range of language tasks.

Mistral: Models from the French company Mistral AI, such as Mistral 7B and Mixtral, are known for strong performance relative to their size, including on chat and code-generation tasks.

Cohere: Cohere is a newer startup that builds generative AI models for businesses, such as its Command family, and it is quickly becoming well known for their strong performance and accuracy.

T5 (Text-to-Text Transfer Transformer): This model by Google can handle many language tasks, such as translating text, summarizing information, and answering questions.

Use Cases:

Creating Marketing Content: LLMs can quickly generate text for ads and product descriptions. They’re great for producing lots of content fast while keeping it in line with the brand's tone.

Medical Research Summaries: LLMs can read and summarize medical research papers, helping doctors stay up-to-date. They can also write reports and help with diagnoses by analyzing patient data.

Custom Learning Materials: In schools, LLMs can make personalized study resources based on how students are doing. They can also create quizzes, explain difficult subjects in simple words, and give feedback on homework.

Analyzing Sentiment: LLMs can examine online reviews, social media, and other user content to understand how people feel about products or events.

Helping with Creative Writing: Writers use LLMs to come up with ideas, dialogues, or story plots. They can even help with writer’s block by suggesting ways to continue unfinished stories.

Chatbots and Assistants: LLMs power chatbots and virtual helpers, which assist in answering questions, providing advice, and performing tasks, improving customer service in many industries.

In short, LLMs are powerful AI tools that understand and produce human language, making tasks like chatting, translating, and answering questions faster and more natural.

6. MLOps:

MLOps (Machine Learning Operations) is a set of best practices that help teams work together smoothly to build, deploy, and maintain machine learning models in real-world applications. It brings together machine learning and IT operations to create a seamless workflow from development to deployment.

At a high level, MLOps focuses on:

Automation: By automating tasks like data preprocessing, model training, and testing, MLOps helps save time and avoid errors, making the process faster and more efficient.

Versioning: Just like how software developers keep track of changes in their code, MLOps ensures that the models, data, code and environment are versioned. This makes it easy to review changes, and if needed, go back to previous versions.

CI/CD for ML: CI/CD stands for Continuous Integration and Continuous Delivery. It’s a practice where ML models and their code are continuously tested and updated, allowing quick deployment of new versions and helping ensure that the deployed model keeps performing well.

Monitoring and Updates: Once a model is deployed, MLOps also keeps an eye on its performance. If the model’s performance drops, it can be retrained on new data to restore its accuracy.
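The monitoring step can be sketched as a rolling accuracy check. The `AccuracyMonitor` class below is hypothetical (not from any MLOps tool): it keeps the outcomes of the last `window` predictions and flags the model for retraining when accuracy falls below `threshold`. Production systems typically rely on dedicated monitoring tools and also watch for changes in the input data.

```python
from collections import deque

class AccuracyMonitor:
    """Hypothetical helper: track accuracy over recent predictions and flag drops."""

    def __init__(self, window=100, threshold=0.9):
        self.results = deque(maxlen=window)  # True = correct prediction; oldest entries drop off
        self.threshold = threshold

    def record(self, prediction, actual):
        self.results.append(prediction == actual)

    def accuracy(self):
        # No data yet: treat the model as healthy
        return sum(self.results) / len(self.results) if self.results else 1.0

    def needs_retraining(self):
        # Only judge once the window is full, so a few early errors don't trigger retraining
        return len(self.results) == self.results.maxlen and self.accuracy() < self.threshold

monitor = AccuracyMonitor(window=10, threshold=0.8)
for pred, actual in [(1, 1)] * 7 + [(1, 0)] * 3:  # 7 correct, 3 wrong
    monitor.record(pred, actual)
print(monitor.accuracy(), monitor.needs_retraining())  # 0.7 True
```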

I will explain these practices in more detail, using cloud platforms, in my upcoming blogs.

7. Tools & Platforms:

To implement AI projects, you'll need the following tools:

Jupyter Notebook: An interactive tool that allows you to write and run Python code in an easy-to-understand format. A notebook is a document where you can combine code, text, equations, and visualizations in one place. This makes it really useful for data science, machine learning, and even teaching, as you can see both the code and the results in the same window. You can get it by installing Anaconda, which includes Jupyter Notebook.

Google Colab: An online environment that allows you to run Python code and experiments without needing to set up anything on your local machine. It’s great for collaborating and using GPUs for deep learning tasks. Explore Google Colab.

Kaggle: A platform for data science projects where you can access datasets and find solutions from the community. It’s perfect for learning and applying machine learning techniques. Visit Kaggle.

Cloud Platforms (Azure, AWS, Google Cloud): These popular platforms allow you to scale your AI projects by providing powerful computing resources such as CPUs, GPUs, and, on Google Cloud, TPUs, which large-scale projects need.

8. Books for Deep Dives:

If you enjoy reading in your leisure time, I suggest the following books:

"Hands-On Machine Learning with Scikit-Learn, Keras, and TensorFlow" by Aurélien Géron: A practical guide for hands-on learners.

"Deep Learning with PyTorch" by Eli Stevens, Luca Antiga, and Thomas Viehmann

"Build a Large Language Model (From Scratch)" by Sebastian Raschka

Conclusion: The Future is Yours to Shape

The world of AI, ML, and LLMs offers endless opportunities. With the right tools and mindset, you can make a real impact. Follow my blogs for detailed explanations on each of these topics we discussed here. Keep learning and experimenting—you're at the beginning of an exciting journey.

Resources: All links mentioned in the blog

Statistics

Khan Academy - Statistics and Probability

Coursera - Statistics and Probability

Machine Learning

Tools

Practice Dataset and Use Cases

Visit Kaggle.

Cloud Platform

AWS,

Connect with me:

You can send your questions to kalpa@aiconnectgrow.com

Join our community by subscribing to the channel https://www.youtube.com/@AIConnectGrow_women

You can follow me on LinkedIn: https://www.linkedin.com/in/kalpa-subbaiah/

Quiz

Which AI/ML concept do you find most exciting?

What's your next step in learning AI?

Let me know which topics you are interested in learning so that I can write my next blog on them 😊
